by Diane Roux, Analyst at XAnge

Data Centers, a Hot Topic Intimately Linked to Scaling AI

Why Data Centers Are at the Center of a Global Tech & Energy Storm

AI is on fire (quite literally). Interest in Generative AI is rising, causing a boom in data center construction and a need for new infrastructure.

Why new infrastructure? Well, the emergence of power-dense, AI-specialized chips has changed the market. The strong computing power of these chips comes with a byproduct: high and concentrated levels of heat. On top of chips becoming denser, server racks are also increasing in density, since AI training requires chips to be physically close to each other. This makes things tricky, because to function properly and avoid hot spots, servers need to be kept cool (within their temperature requirements).

Energy is clearly a bottleneck.

This deep dive will explain the basics around data centers, the backbone of the digital economy, and our perspective on liquid cooling technologies.

The AI Boom is Fueling Explosive Data Volumes & Compute Demand

It’s safe to say we’ve all heard about generative AI’s impact on reshaping industries. This progress has been benefiting the entire tech and startup ecosystem, thanks to the decoupling of the value and cost of data, and the falling cost of compute.

But AI’s promise is utterly dependent on physical computing infrastructure. Power demand is surging rapidly, and Google is a great example of this. In just four years, the company has doubled its data center energy use (to 30.8 million MWh). For context, in 2024 data centers made up 95.8% of Google’s total electricity usage.
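To put the Google figures in perspective, here is a minimal sketch of the arithmetic behind them. It assumes constant year-on-year growth over the four years (a simplification, since the actual yearly figures are not given here), and the function name is purely illustrative.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant yearly growth rate that turns 1 into `multiple` over `years`."""
    return multiple ** (1.0 / years) - 1.0


# Figures quoted in the text.
DATA_CENTER_MWH_MILLIONS = 30.8   # Google data center energy use
DATA_CENTER_SHARE = 0.958         # share of Google's total electricity in 2024

# Assumption: growth was spread evenly over the four years.
growth_rate = implied_annual_growth(multiple=2.0, years=4)

# Total electricity implied by the 95.8% share.
total_mwh_millions = DATA_CENTER_MWH_MILLIONS / DATA_CENTER_SHARE

print(f"Implied annual growth: {growth_rate:.1%}")
print(f"Implied total electricity use: {total_mwh_millions:.1f} million MWh")
```

Under that assumption, doubling in four years corresponds to roughly 19% growth per year, and the 95.8% share implies a company-wide total of about 32 million MWh.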

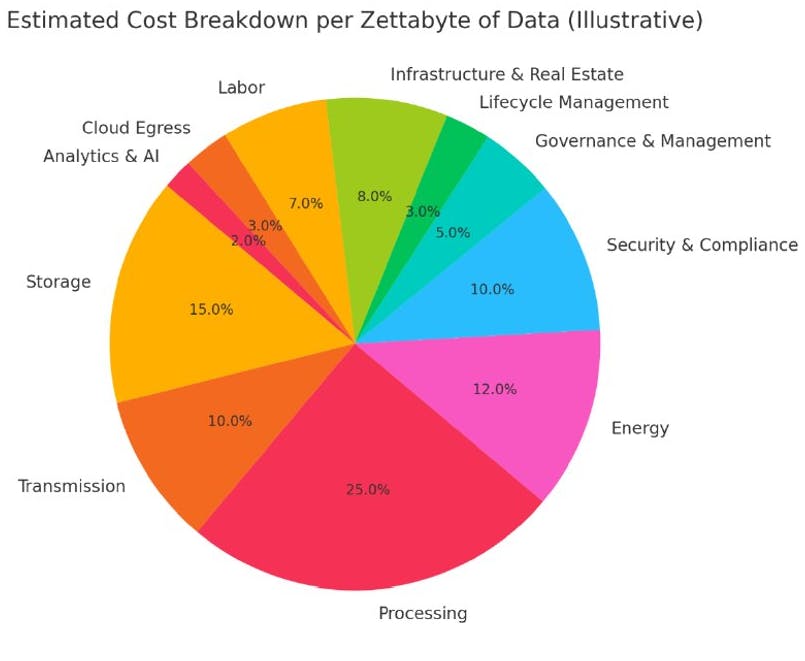

The graph to the right demonstrates the need for more data center and server chip efficiency (Processing + Energy = 37% of the cost per Zettabyte of Data).

Shoutout to our portfolio company Vertical Compute, targeting the processing challenge & need for more server chip efficiency (by solving the “memory wall”).

The Urgency: Data Centers, the Physical Backbone, Face Critical Bottlenecks

With power demand surging, grid vulnerabilities are surfacing. Data centers already consume 1.5% of global electricity. The IEA projects world data-center power use to double by 2030 (~3% of global electricity supply), and both hyperscale clouds and AI training (e.g. GPT-class models) are the main drivers of this growth.

These bottlenecks fall into three categories:

Scale: Need for increased power, more data center efficiency, and more server chip efficiency.

Deploy: Need to reduce semiconductor manufacturing costs, optimize power consumption on edge devices, and run local models efficiently.

Secure: Need to protect data privacy during training & inference, ensure unbiased and fair models, and scale open-access AI infrastructure.

Overall, tech infrastructure represents both a huge investment risk and a huge opportunity.

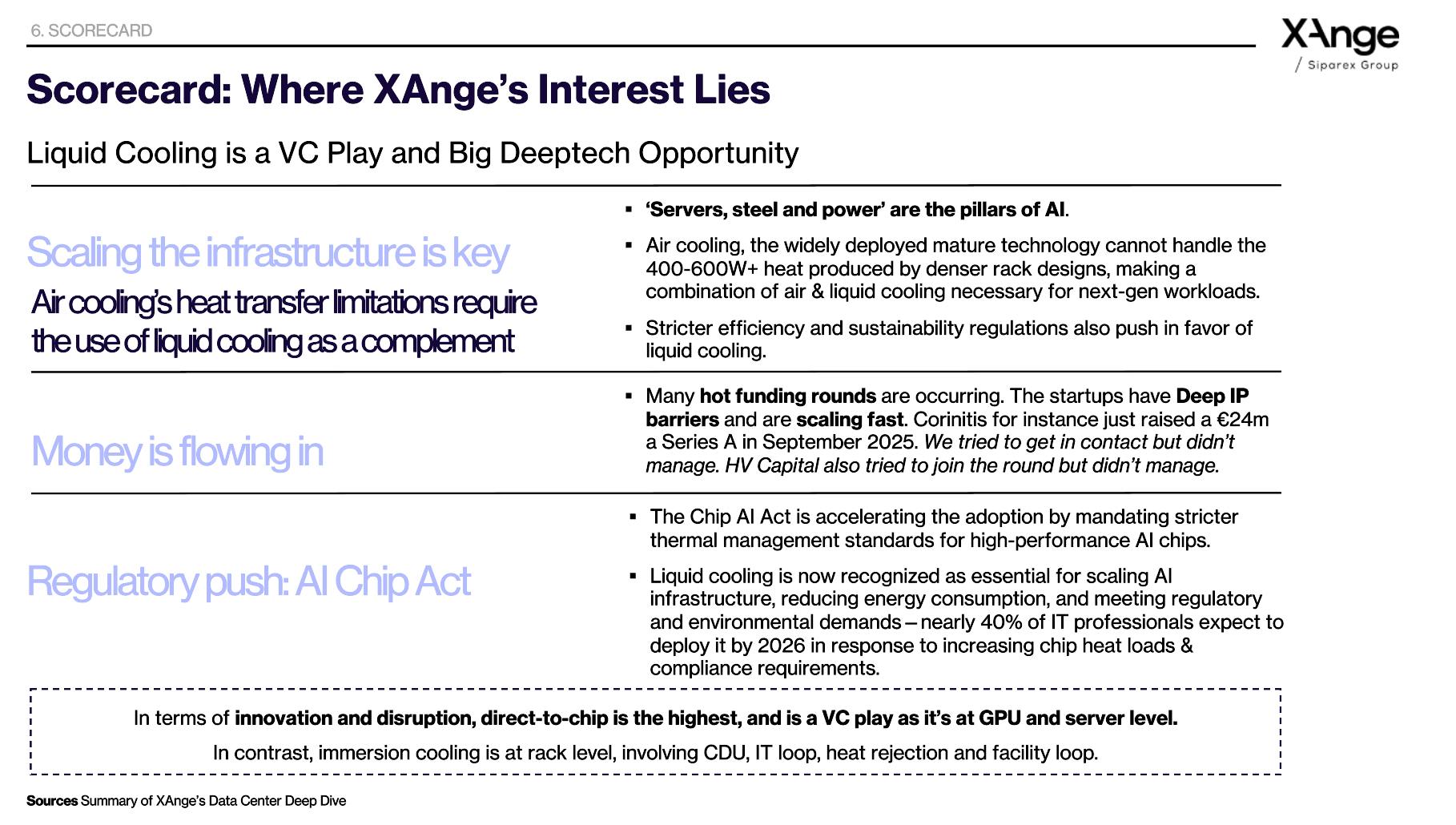

Indeed, making models more efficient is key (research breakthroughs will enable faster reasoning and better use of data), since today’s model development is outpacing data center construction. However, building and scaling data centers is just as central, with “servers, steel and power” being the pillars of AI.

But How do Data Centers Actually Work?

European Context and Key Terms

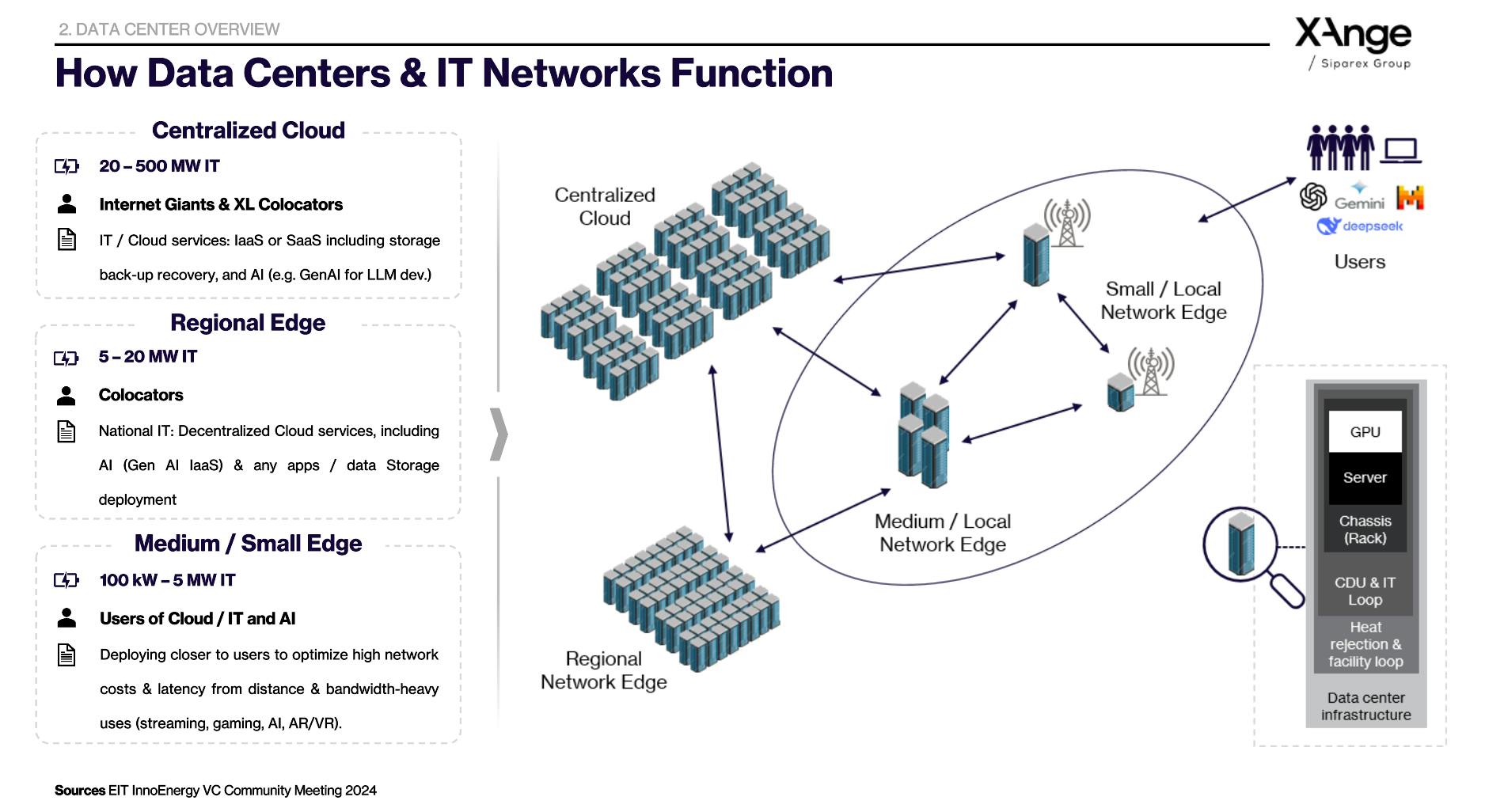

What is colocation?

- Colocation is a model where businesses rent space, power, and network connectivity within a shared, high-security data center facility, instead of building and operating their own private data centers.

- Companies install their own physical servers and network hardware in rented racks, cages, or suites.

- Benefits: infrastructure cost savings, scalability without major capital investment, physical & network security, reliability (power/cooling)

- Typical Clients: cloud providers, SaaS companies, financial institutions, telecom operators, and growing enterprises needing resiliency and compliance.

Overview of the infrastructure

- Data centers host racks of servers (compute/storage), all secured with robust cooling, backup power, and advanced networking.

- The white room is the primary operational area of a data center, housing IT equipment such as servers, storage systems, and networking gear. This secure, climate-controlled space is designed for optimal equipment density, efficient airflow, and future scalability, distinguishing it from support areas containing power & cooling.

- Modifying the white room is highly challenging due to its precisely engineered environment.

- Different layers (cloud, regional, edge) are interlinked, supporting national, regional, and local digital workloads.

Interconnection with IT networks

Data Center Market Map

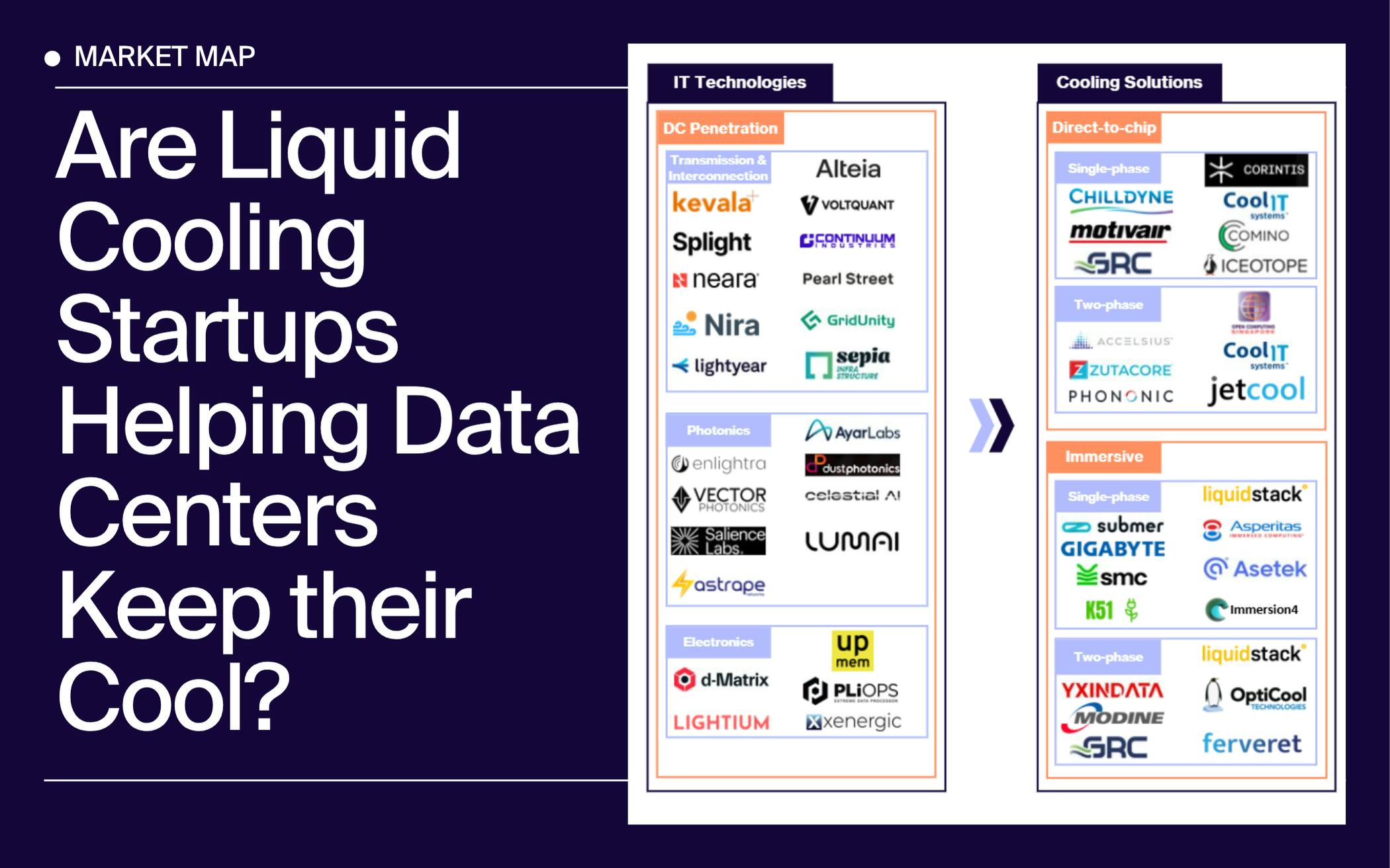

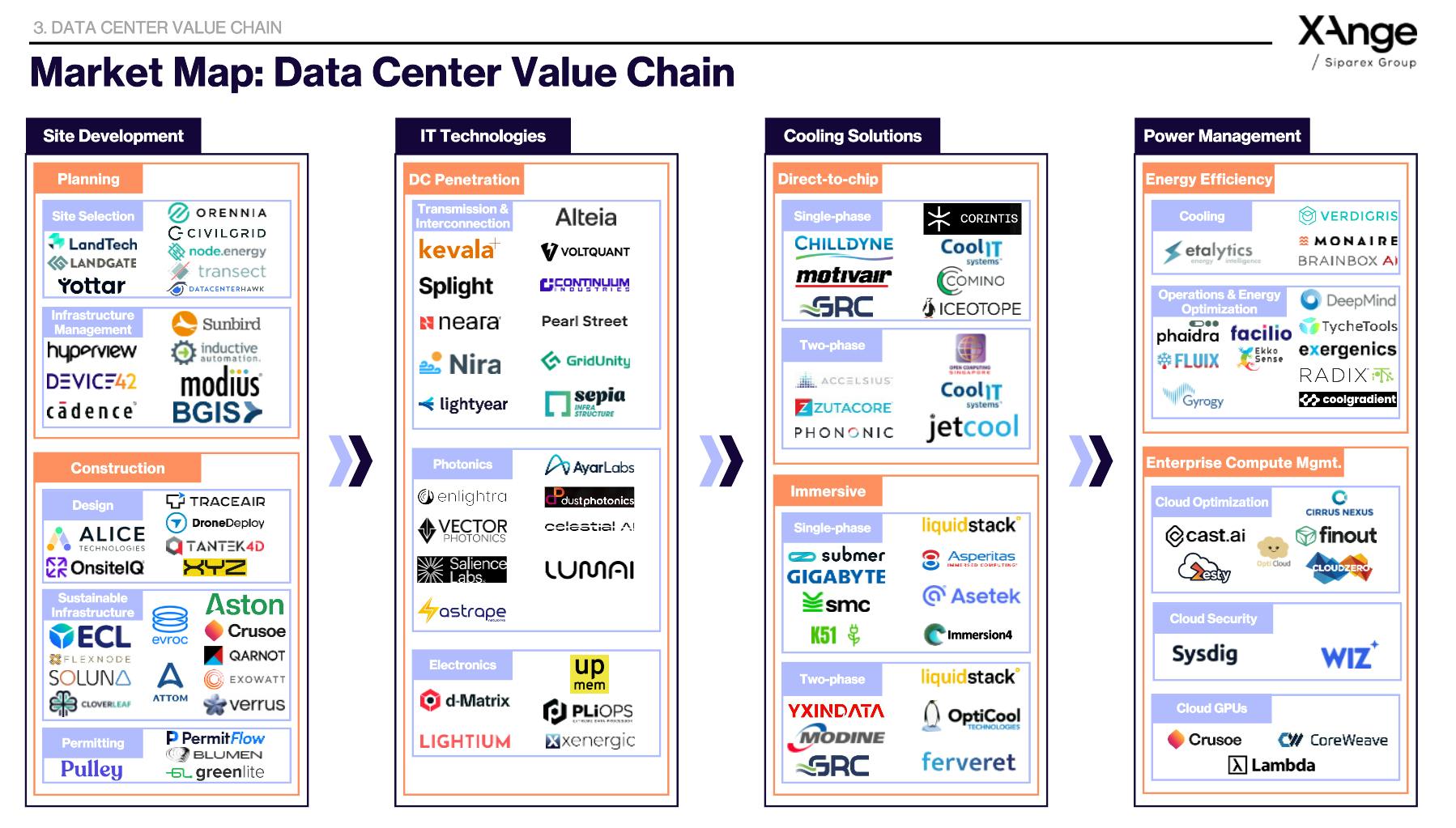

Interesting observation: US companies are overrepresented, with European and Asian companies forming a sizeable but clearly smaller minority.

My reasoning: The US is home to most hyperscale cloud and AI operators (Amazon AWS, Google, Microsoft, Meta, Oracle), who drive demand and investment along the entire stack, from site selection to cooling and power management. Moreover, for cooling specifically, the US was a pioneer in funding startups in the space thanks to the Cooler Chips program, which the US government launched in 2022.

Liquid Cooling Tech Deep Dive: Addressing the Energy Bottleneck

Now getting to the core of the deep dive.

The increasing interest in Generative AI has not only caused a boom in the construction of Data Centers, but has also been transforming the infrastructure inside.

Indeed, chips generate heat when they run, but to operate efficiently, they must be kept within specific temperature limits.

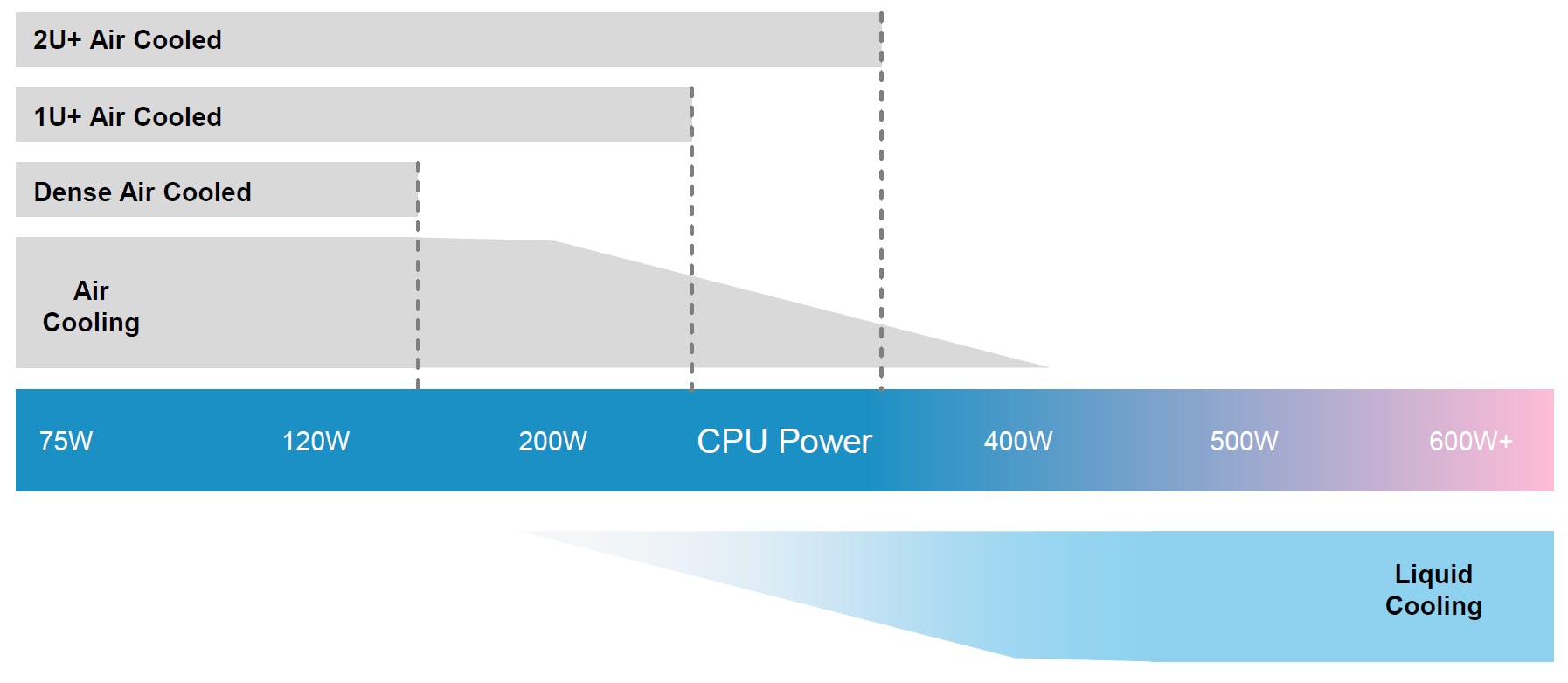

The status quo for cooling is air cooling. However, with the emergence of power-dense, AI-specialized chips, and with servers also increasing in density, the byproduct is high and concentrated levels of heat.

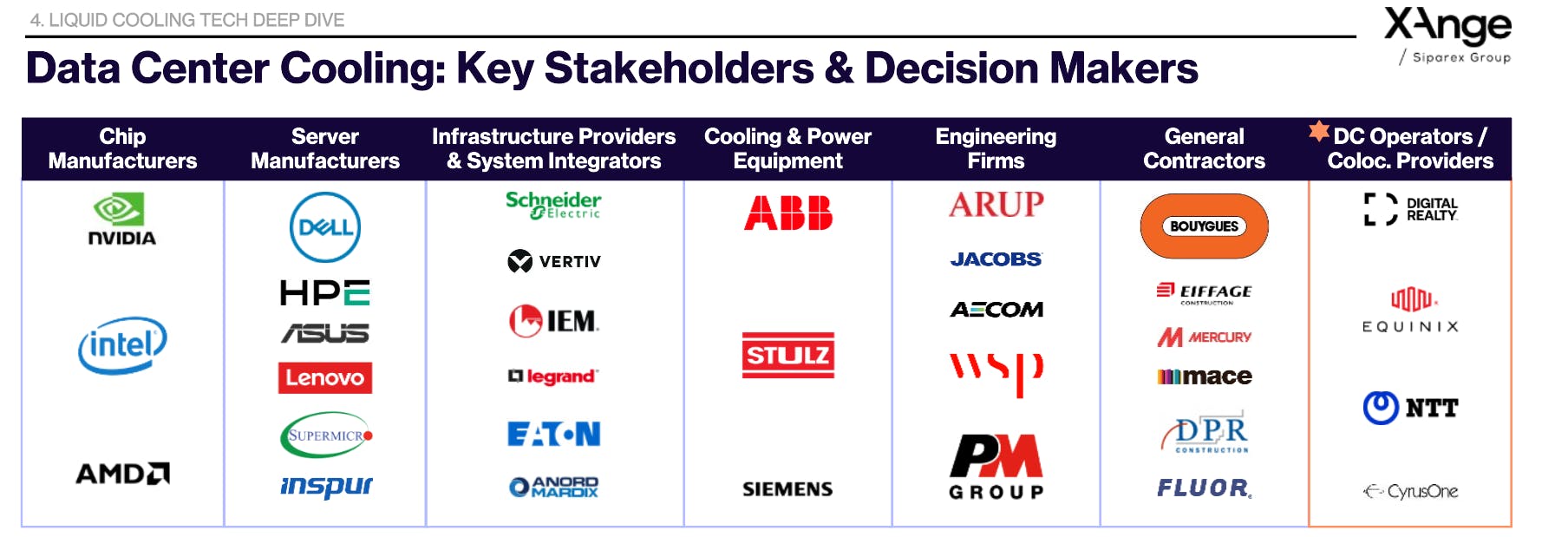

Key Stakeholders & Decision Makers for Cooling Strategies

In modern data centers, liquid cooling solutions are typically installed within controlled environments known as white rooms.

At the heart of these decisions are data center operators and colocation providers, who serve as the ultimate decision-makers and ongoing managers of facility operations. These operators act as central purchasers and arbiters for all critical systems, including cooling solutions. Their responsibilities extend beyond initial equipment selection to encompass contracting with suppliers, overseeing system integration, and managing long-term operational performance. By setting technical and sustainability standards, they ensure that the infrastructure aligns with industry best practices and environmental goals.

Moreover, data center operators serve as primary points of contact for a wide array of stakeholders, ranging from startups innovating cooling technologies to server equipment manufacturers, infrastructure integrators, and equipment providers. They play a pivotal role in choosing which partners and technologies to deploy, balancing performance, cost, and environmental considerations. This vital function positions operators as influencers and gatekeepers in shaping the future landscape of data center cooling solutions.

However, it is also important to note the influence chip and server manufacturers can have. For instance, when NVIDIA released an AI-specialized chip in 2022 whose label made clear that it needed direct-to-chip cooling, it sent a clear market signal.

By maintaining oversight of the entire lifecycle, from procurement and installation to ongoing management, these operators ensure that cooling systems meet strict service level agreements and performance targets.

The Need for New and Improved Cooling: Liquid Cooling is a Preferable Option for High-Density Data Centers

As computing power increases, so does the challenge of managing heat within data centers. Effective cooling strategies are now central to both operational reliability and cost efficiency.

Pain Point: Heat generation

- IT equipment (servers with CPUs, GPUs, memory) converts electrical energy into heat.

- Safe and reliable operation requires continuous heat removal.

Zero downtime requires two essentials

- Power supply

- Cooling system

To ensure server longevity and stability, modern data centers must deploy robust heat management practices.

Server heat management

- Heat is dissipated through conduction, heat spreaders, finned heat sinks, and fans.

- CPUs typically tolerate up to ~80°C; beyond this, they throttle performance or risk failure.

- Downtime of just one hour can cost on average $140K – $540K.
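As a rough illustration of what the downtime figure above means for shorter outages, here is a minimal sketch. It assumes cost scales linearly with outage duration, which is a simplification (real outages often carry fixed costs too), and the function and constant names are hypothetical.

```python
# Hourly downtime cost range quoted in the text (USD).
COST_PER_HOUR_LOW_USD = 140_000
COST_PER_HOUR_HIGH_USD = 540_000


def downtime_cost_range(minutes: float) -> tuple[float, float]:
    """Estimate the (low, high) cost of an outage lasting `minutes`.

    Assumption: cost grows linearly with duration.
    """
    hours = minutes / 60.0
    return hours * COST_PER_HOUR_LOW_USD, hours * COST_PER_HOUR_HIGH_USD


low, high = downtime_cost_range(minutes=15)
print(f"A 15-minute outage: ${low:,.0f} to ${high:,.0f}")
```

Even under this simple linear assumption, a cooling failure lasting a quarter of an hour would land in the tens of thousands of dollars, which is why cooling sits alongside power supply as an essential for zero downtime.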

A range of cooling technologies have been adopted, but high-density environments drive the search for more advanced solutions.

Cooling technologies

- Air cooling: Traditional, mature, widely deployed.

- Liquid cooling: Superior heat transfer, increasingly preferred for high-density data centers.

Each approach has pros & cons, but liquid cooling is more efficient for next-generation workloads.

TL;DR: Liquid cooling uses a liquid coolant to remove heat directly from the sources (e.g. CPU / GPU).
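To make the "superior heat transfer" point concrete, the sketch below compares how much air versus liquid must flow past a rack to carry away the same heat, using the sensible-heat relation Q = ρ · V · c_p · ΔT. The fluid properties are standard approximate room-temperature values for air and water. The 50 kW rack and the 10 K coolant temperature rise are illustrative assumptions, not figures from this article, and water stands in here for whichever liquid coolant a real system would use.

```python
# Approximate fluid properties near room temperature.
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_PER_KG_K = 1005.0
WATER_DENSITY_KG_M3 = 997.0
WATER_CP_J_PER_KG_K = 4180.0


def volumetric_flow_m3_per_s(heat_w: float, density: float,
                             cp: float, delta_t_k: float) -> float:
    """Flow needed to absorb `heat_w` with a coolant temperature rise of `delta_t_k`.

    Derived from Q = density * flow * cp * delta_T.
    """
    return heat_w / (density * cp * delta_t_k)


# Illustrative assumptions: a 50 kW rack and a 10 K coolant temperature rise.
rack_heat_w = 50_000.0
delta_t_k = 10.0

air_flow = volumetric_flow_m3_per_s(rack_heat_w, AIR_DENSITY_KG_M3,
                                    AIR_CP_J_PER_KG_K, delta_t_k)
water_flow = volumetric_flow_m3_per_s(rack_heat_w, WATER_DENSITY_KG_M3,
                                      WATER_CP_J_PER_KG_K, delta_t_k)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000:.2f} L/s")
print(f"Air needs about {air_flow / water_flow:,.0f}x the volume flow of water")
```

The ratio depends only on the fluids' volumetric heat capacities, not on the rack power or temperature rise chosen, so the gap of several thousand times holds regardless of the assumed rack size.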

Illustration of the Limitations of Air Cooling

Two Main Types of Liquid Cooling

There are two main ways of using liquid to carry heat from the server to the heat exchanger. In direct-to-chip cooling, cold liquid circulates through a network of pipes and cold plates mounted on the chips, without touching the electronics. In immersion cooling, the liquid is directly in contact with the chips.

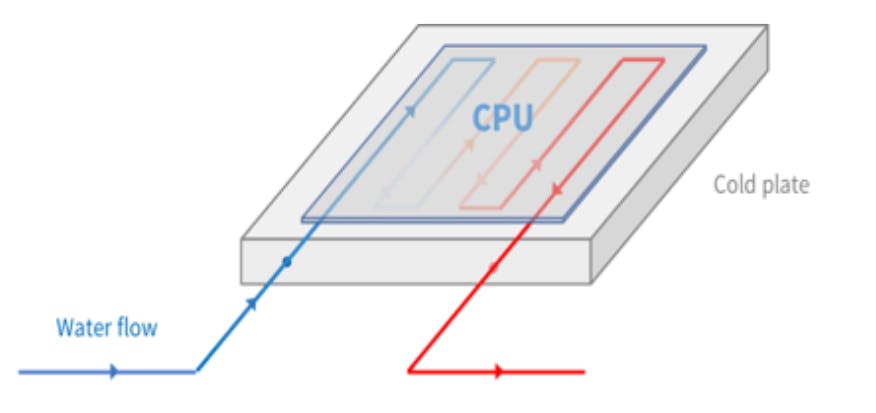

Direct-to-Chip: single phase & two-phase

- Direct-to-Chip or D2C (e.g. cold plates): IT equipment is not in direct contact with the liquid.

- Direct-to-chip liquid cooling only removes heat from the hottest components (CPUs or GPUs), so it cannot remove 100% of the heat produced. Air cooling is still required to remove the residual heat.

- The fluid in a two-phase system evaporates as it absorbs heat, using latent heat for higher efficiency than a single-phase system.
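The two points above (residual heat left for air, and the latent-heat advantage of two-phase systems) can be sketched numerically. All numbers below are hypothetical placeholders chosen for illustration: the 1 kW server, the 75% liquid capture fraction, and the coolant properties do not describe any specific product or fluid. The two-phase case also assumes the fluid fully evaporates and ignores any sensible heating.

```python
def residual_air_heat_w(server_heat_w: float, liquid_capture_fraction: float) -> float:
    """Heat that cold plates do not capture and that air must still remove."""
    return server_heat_w * (1.0 - liquid_capture_fraction)


def single_phase_mass_flow_kg_s(heat_w: float, cp_j_per_kg_k: float,
                                delta_t_k: float) -> float:
    """Coolant mass flow when heat is absorbed as a temperature rise (Q = m * cp * dT)."""
    return heat_w / (cp_j_per_kg_k * delta_t_k)


def two_phase_mass_flow_kg_s(heat_w: float, latent_heat_j_per_kg: float) -> float:
    """Coolant mass flow when heat is absorbed by evaporation (Q = m * h_fg)."""
    return heat_w / latent_heat_j_per_kg


# Hypothetical inputs, for illustration only.
server_heat_w = 1000.0
liquid_capture_fraction = 0.75      # share of heat taken by the cold plates
coolant_cp = 1100.0                 # J/(kg K), assumed dielectric coolant
coolant_latent_heat = 100_000.0     # J/kg, assumed dielectric coolant
delta_t_k = 10.0                    # allowed single-phase temperature rise

air_heat = residual_air_heat_w(server_heat_w, liquid_capture_fraction)
liquid_heat = server_heat_w - air_heat

single = single_phase_mass_flow_kg_s(liquid_heat, coolant_cp, delta_t_k)
two = two_phase_mass_flow_kg_s(liquid_heat, coolant_latent_heat)

print(f"Heat left for air cooling: {air_heat:.0f} W")
print(f"Single-phase coolant flow: {single * 1000:.1f} g/s")
print(f"Two-phase coolant flow:    {two * 1000:.1f} g/s")
```

With these placeholder values, the two-phase loop moves the same heat with far less fluid, which is the efficiency gain the last bullet refers to, while a quarter of the server's heat still has to be handled by air.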

Pros and Cons

Single Phase Cold Plate

- Pros: Easy to install on existing servers, and handles powerful CPUs well.

- Cons: Risk of leaks in server; needs big tubes & pumps.

Two Phase Cold Plate

- Pros: Same as the single-phase cold plate.

- Cons: Can lock in specific server designs, needs a lot of copper, and involves more complicated systems.

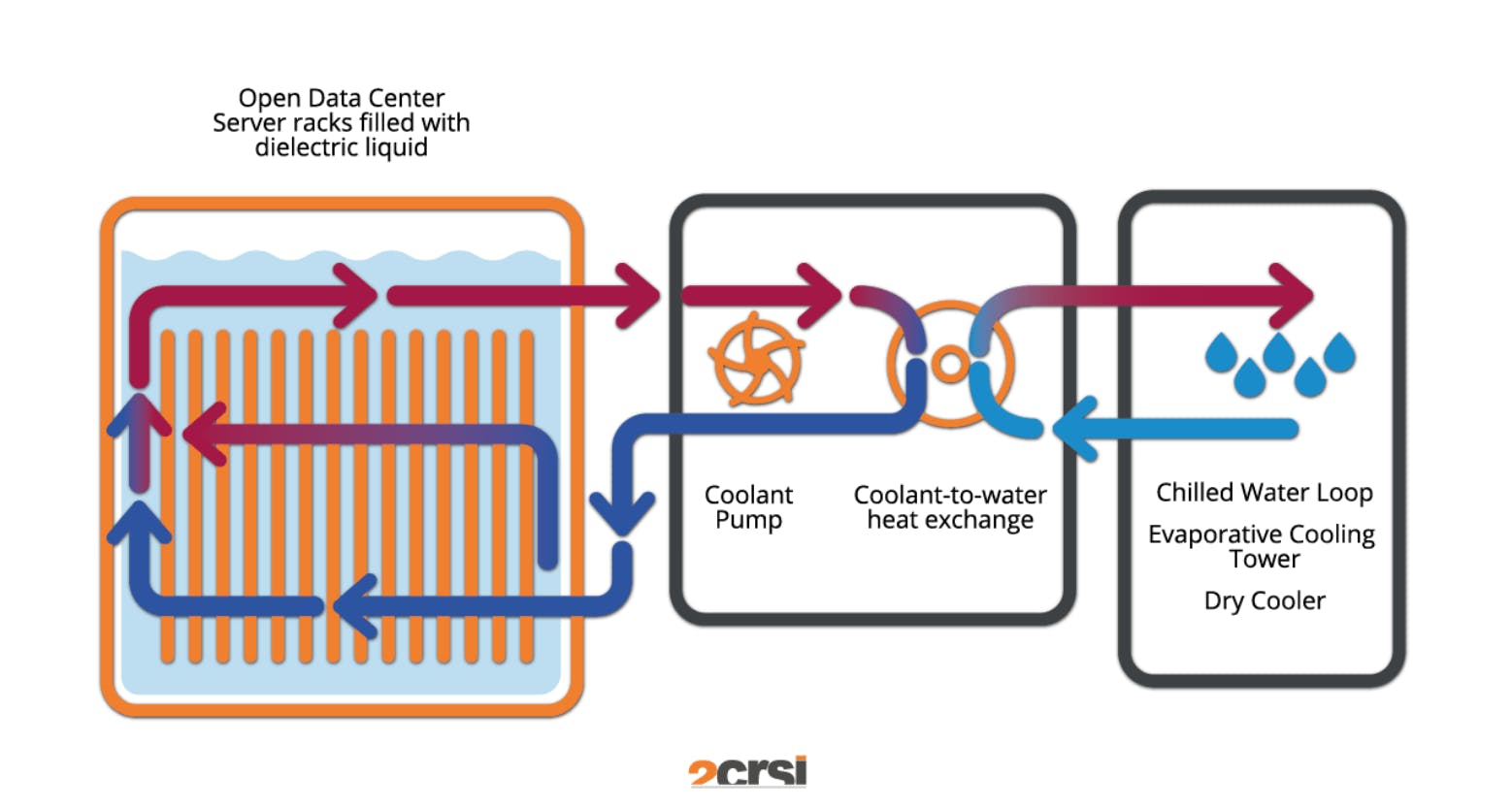

Immersive: Immersion Chassis (single-phase) & Immersion Tub (single-phase or two-phase)

- Immersive: IT equipment is in direct contact with the liquid.

- The Immersion Tub is the most disruptive technology: servers are immersed vertically in a bath of dielectric fluid. This setup provides the greatest cooling capacity and is therefore ideal for HPC. It also has very low power consumption.

Pros & Cons

Single Phase Immersion

- Pros: Simple & reliable, works with any server. Cost-effective over time

- Cons: Limited cooling level, and requires new maintenance. Servers must be modified; complicated hardware changes.

Two Phase Immersion

- Pros: Can cool very powerful servers, and handles high heat levels.

- Cons: Complex system, and the same retrofitting drawback as single-phase immersion (servers must be modified).

To understand how the adoption of both types of technology will evolve, it’s interesting to look at China.

In China, NVIDIA GPUs are less energy efficient, so the need for liquid cooling is even greater. Single-phase immersion cooling (i.e. soaking the server) has been adopted at Alibaba, but the approach that currently dominates, while showing strong potential for scaling, is hybrid cold plate cooling (similar to D2C) combined with air cooling.

Another interesting market signal is the Intel CEO’s seat on the board of Corintis, a Swiss direct-to-chip startup.

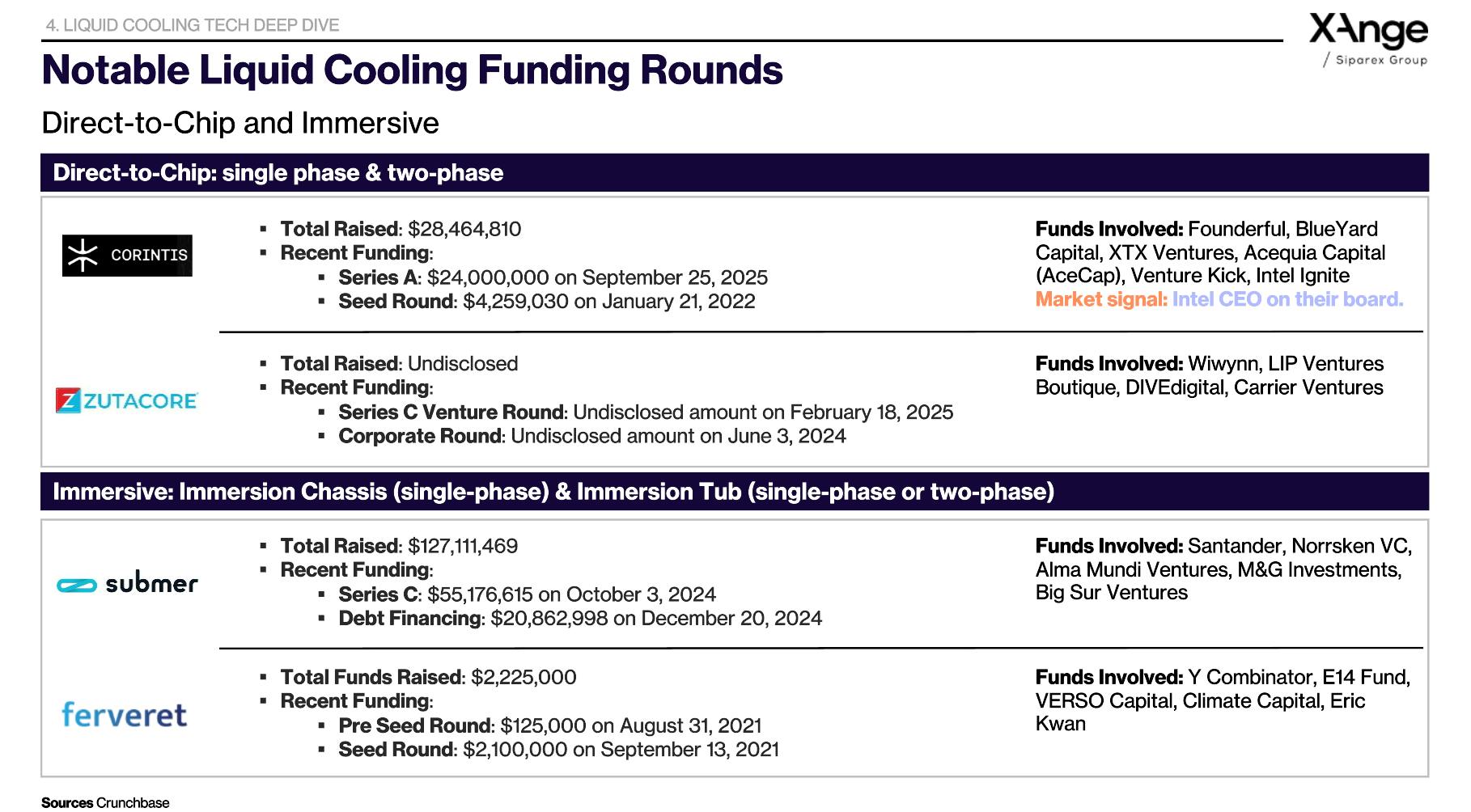

Notable Liquid Cooling Funding Rounds

Corintis, Zutacore, Submer, and Fevert, among others, are notable startups in the field.

As part of their development, their technologies are deployed for testing in commercially operational environments. Notably: GRC (Intel, Hewlett Packard Enterprise), Corintis (Intel, Microsoft), Iceotope (Intel), CoolIT Systems (Dell), Submer (Intel), and LiquidStack (NTT).

Challenges & Opportunities from Liquid Cooling

Drivers of Liquid Cooling Adoption:

- Need for improved cooling performance in high-density workloads

- Flexibility in processing power and energy use, with hybrid and future-proof deployments

- Cost efficient

- Stricter efficiency and sustainability regulations

Barriers to Adoption:

- Hydrophobia: Reluctance to bring liquid close to IT racks

- Operational complexity: New procedures, skills, and dual cooling systems

- Retrofitting limits: Existing air-cooled facilities are costly to adapt (mostly applies to immersion)

- Resiliency concerns: Redundant parts but still single points of failure

- Limited IT system compatibility: Few OEMs natively support liquid cooling

Final Words - Our Scorecard

Liquid Cooling is a VC Play and Big Deeptech Opportunity
