Large Language Models (LLMs) don’t reason — they autocomplete. And they autocomplete very well, provided they’re given the right context. That’s why the most important infrastructure shift in AI right now isn’t “bigger models,” but the tooling that helps them work reliably inside organizations.

This is where LLMOps comes in — the stack of frameworks, observability platforms, context management systems, and security layers that transform an autocomplete engine into a functioning AI agent.

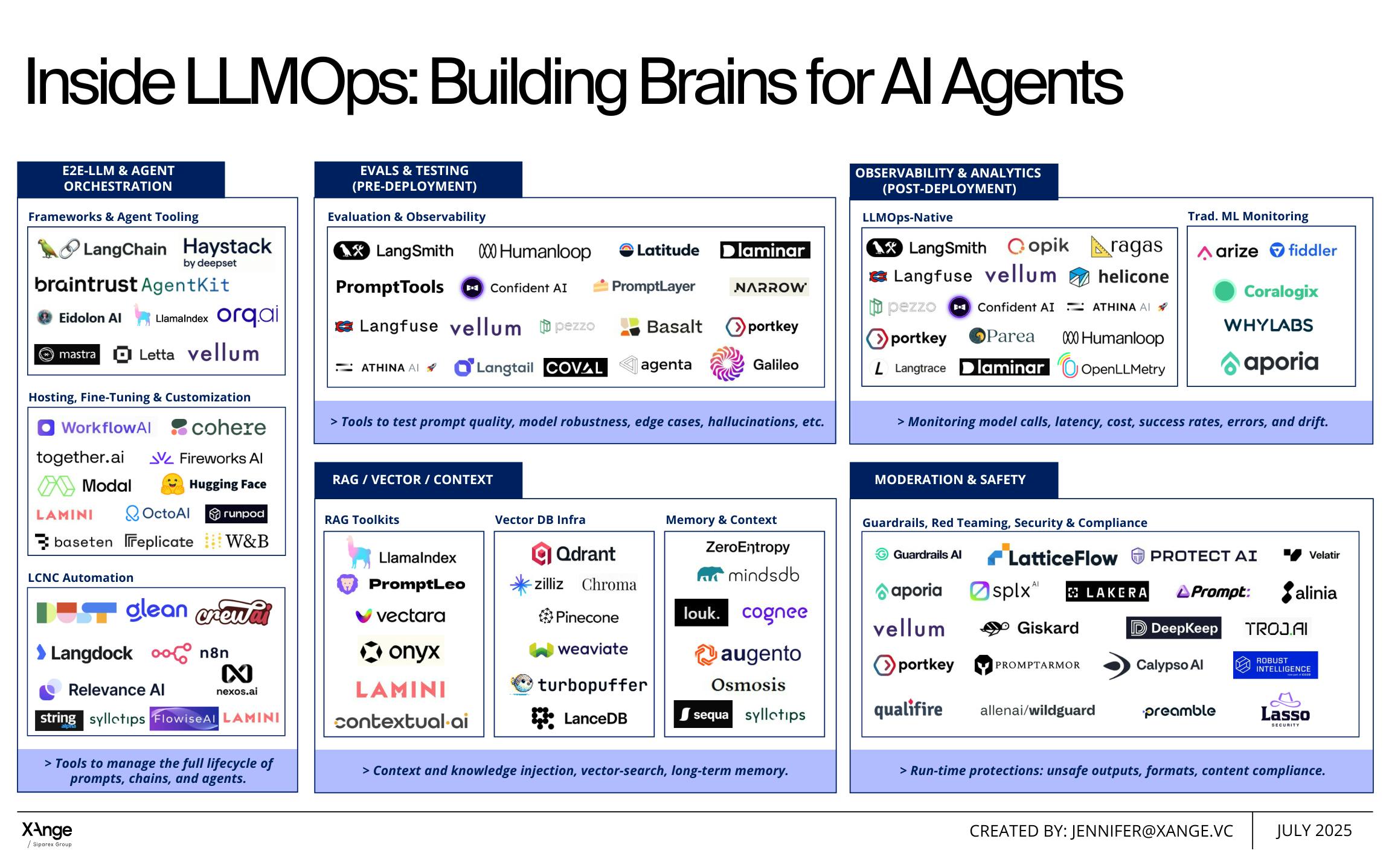

The market is young, fragmented, and fast-evolving. But if you squint at the tooling map (see below), you can start to see the outlines of a full-stack ecosystem emerging. And from a venture perspective, it’s an ecosystem moving from experimentation into adoption — one that’s attracting significant capital flows, rapid startup formation, and early consolidation.

E2E LLM & Agent Orchestration

This is where AI agents are born. Frameworks like LangChain, Haystack, and LlamaIndex help orchestrate LLM-powered workflows. Hosting and customization platforms (Cohere, Hugging Face, Fireworks) let teams fine-tune and serve models. Meanwhile, low-code/no-code orchestration tools (Flowise, CrewAI, Langdock, n8n) make it possible to chain together agents and automate across enterprise systems.

▪️Think of this as Zapier meets AI — structured flows, not prompt hacks.

Evals & Testing (Pre-Deployment)

Evals & Testing (Pre-Deployment)

LLMs can hallucinate, fail silently, or break under edge cases. Pre-deployment evaluation platforms like LangSmith, Humanloop, Galileo, and PromptLayer give teams the ability to stress-test prompts, evaluate robustness, and debug workflows before shipping.

▪️ This layer is the equivalent of CI/CD for AI agents.

Observability & Analytics (Post-Deployment)

Once deployed, agents need monitoring like any mission-critical system. Tools like Langfuse, Pezzo, Parea, and OpenLLMetry track latency, costs, success rates, and error modes. Traditional ML observability players (Arize, Fiddler, WhyLabs, Aporia) are expanding into LLMOps too.

▪️This is where AI teams finally get dashboards to debug why their “assistant” just cost $2,300 on a single query.

RAG / Vector / Context

If LLMs lack context, they fail. Enter Retrieval-Augmented Generation (RAG). Vector DBs (Weaviate, Pinecone, Qdrant, Chroma) and memory systems (Letta, Mem0, Augmento) inject context into models in real-time. On top, RAG toolkits like PromptLeo, Onyx, and Contextual AI orchestrate retrieval pipelines.

▪️This is the heart of Context Engineering — feeding the model exactly what it needs, no more, no less.

Moderation & Safety

With great context comes great risk. Attack surfaces expand as models ingest live data. Companies like Lakera, Giskard, LatticeFlow, and Prompt Security focus on preventing prompt injection, context poisoning, and compliance failures. Others like Guardrails AI and Protect AI provide runtime filters for unsafe outputs.

▪️ Think of this as the firewall and governance layer of the stack.

Why This Map Matters

Every startup claims to be “AI-powered.” But the defensibility doesn’t come from plugging GPT into your SaaS. It comes from building robust context flows, debugging tools, and protective guardrails.

For founders, context is the moat. Anyone can call an API, but designing persistent memory, proprietary retrieval, and secure ingestion is hard — and deeply valuable.

For investors, LLMOps is the classic “picks-and-shovels” play. Market sizing estimates suggest the AI infrastructure layer will grow to $30–50B by 2030, with LLMOps tooling expected to capture a meaningful share of that spend. VC interest reflects this: the last 18 months have seen over $2B raised across more than 100 companies in observability, RAG, and orchestration tools alone. As Martin Casado (a16z) puts it:

“The infrastructure is where the value is … Every time you have a platform shift, you’ll get a new set of infrastructure companies … the value’s going to accrue largely to the infrastructure because that’s where the differentiation ends up happening.”

The pattern rhymes with DevOps: in the early 2010s, thousands of fragmented DevOps tools emerged, before category leaders consolidated the market (think Datadog, GitLab, Snowflake). Today we’re at a similar inflection point in AI infrastructure: fragmentation, rapid adoption, and a race to standardize. The winners will not only capture massive enterprise budgets but also define the technical norms for how AI agents operate at scale.

Final Thought

AI isn’t here to take your job… yet. But the difference between a glorified autocomplete and a genuinely intelligent assistant is context + control.

The companies in this market map are racing to solve exactly that: how to give AI agents the structured environments, observability, and safeguards they need to act more like teammates — and less like overconfident interns.

The next AI winners won’t be defined by model size. They’ll be defined by how well they manage context, workflows, and trust.

Welcome to LLMOps.