Enterprise AI adoption has moved from experimentation to embedded behavior. LLMs are now present across workflows, products, and internal tooling, often faster than security organizations can meaningfully govern them. The result is a widening gap between how AI systems are used and how they are secured.

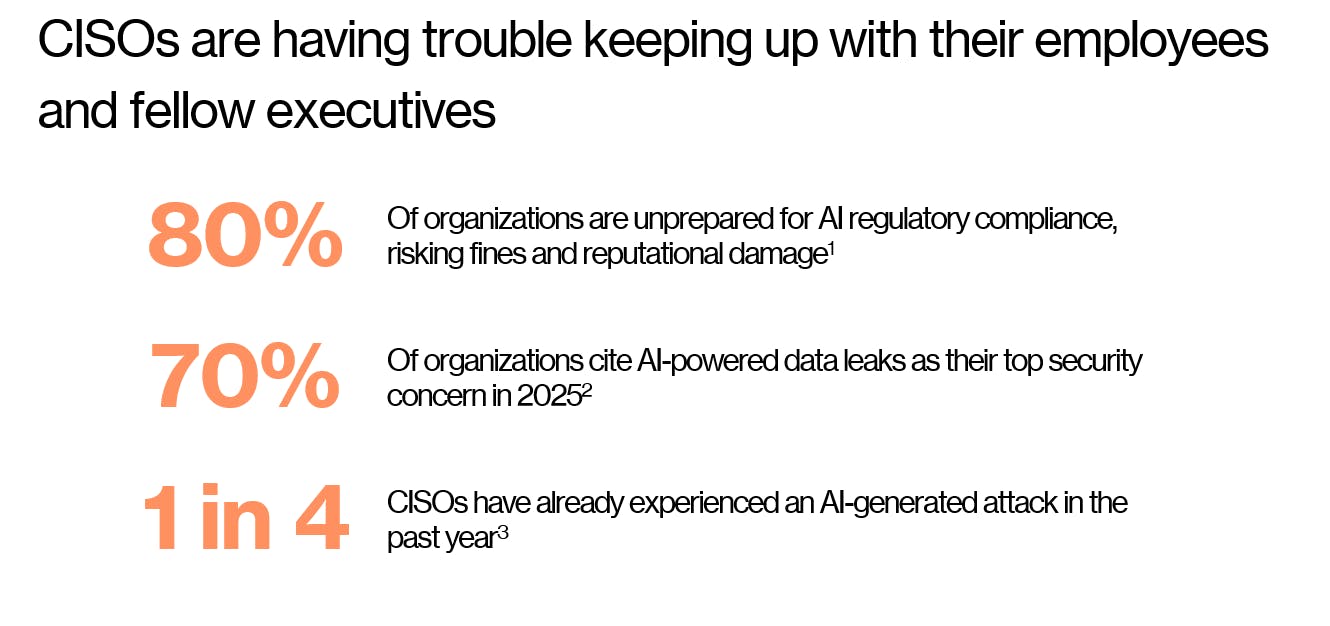

This is not a theoretical problem. A majority of enterprises admit they are unprepared for AI regulatory compliance, and a 2025 survey of more than 200 global CISOs found that 70% of organizations rank AI-powered data leaks as their top security concern this year. On the attacker side, AI is driving a proliferation of attacks. AI-powered data leaks and AI-generated attacks are already material events for CISOs.

It’s a perfect storm: more risk overall, and a new kind of risk that traditional security tooling was never designed to address.

Two categories of risk, one common blind spot

In practice, AI security risk clusters around two vectors.

First, unregulated AI usage. Employees adopt public LLMs, browser extensions, and copilots faster than IT can approve them. Sensitive data is pasted into prompts, outputs are reused downstream, and no centralized visibility exists. Shadow IT becomes shadow AI.

Second, even approved AI systems are fragile. LLM-powered applications and agents are uniquely vulnerable to prompt injection, data leakage, poisoned dependencies, excessive autonomy, and unverified outputs. These systems do not fail like traditional software. They fail linguistically, probabilistically, and often invisibly until damage is done.

Among these risks, prompt injection stands out as the most structurally dangerous. When an AI system can ingest untrusted content, access private data, and communicate externally, it creates a “lethal trifecta.” In that configuration, a single malicious input can hijack the system, extract confidential data, and exfiltrate it without triggering conventional security alarms. Real-world incidents at large technology companies have already demonstrated this exact playbook.
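To make the trifecta concrete, here is a minimal sketch of a configuration check that flags any agent holding all three capabilities at once. The `AgentCapabilities` type, its flag names, and `review_config` are hypothetical. A real system would derive these flags from the agent's actual tool and data permissions rather than from hand-set booleans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical capability flags for one AI agent configuration."""
    ingests_untrusted_content: bool  # e.g. reads web pages, emails, third-party docs
    accesses_private_data: bool      # e.g. internal files, databases, credentials
    communicates_externally: bool    # e.g. HTTP requests, sending email, webhooks


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """True only when all three risk conditions are present together."""
    return (
        caps.ingests_untrusted_content
        and caps.accesses_private_data
        and caps.communicates_externally
    )


def review_config(name: str, caps: AgentCapabilities) -> str:
    """Return a simple verdict string for an agent configuration."""
    if has_lethal_trifecta(caps):
        return f"{name}: BLOCK - remove at least one of the three capabilities"
    return f"{name}: OK"


if __name__ == "__main__":
    coding_agent = AgentCapabilities(True, True, True)
    summarizer = AgentCapabilities(True, False, True)  # no private data access
    print(review_config("coding_agent", coding_agent))
    # coding_agent: BLOCK - remove at least one of the three capabilities
    print(review_config("summarizer", summarizer))
    # summarizer: OK
```

The point of the check is structural: removing any one leg (for example, cutting off external communication) breaks the exfiltration path even if a malicious input still gets through.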

Within 24 hours of Google releasing its Gemini-powered AI coding tool Antigravity, a security researcher demonstrated that a single malicious code snippet, once marked “trusted,” could reprogram the agent’s behavior and install a persistent backdoor. The AI gained ongoing access to local files, survived restarts and reinstalls, and could be used to exfiltrate data or deploy ransomware. No infrastructure exploit was required. The failure emerged directly from agentic autonomy, broad system access, and misplaced trust in unvetted inputs.

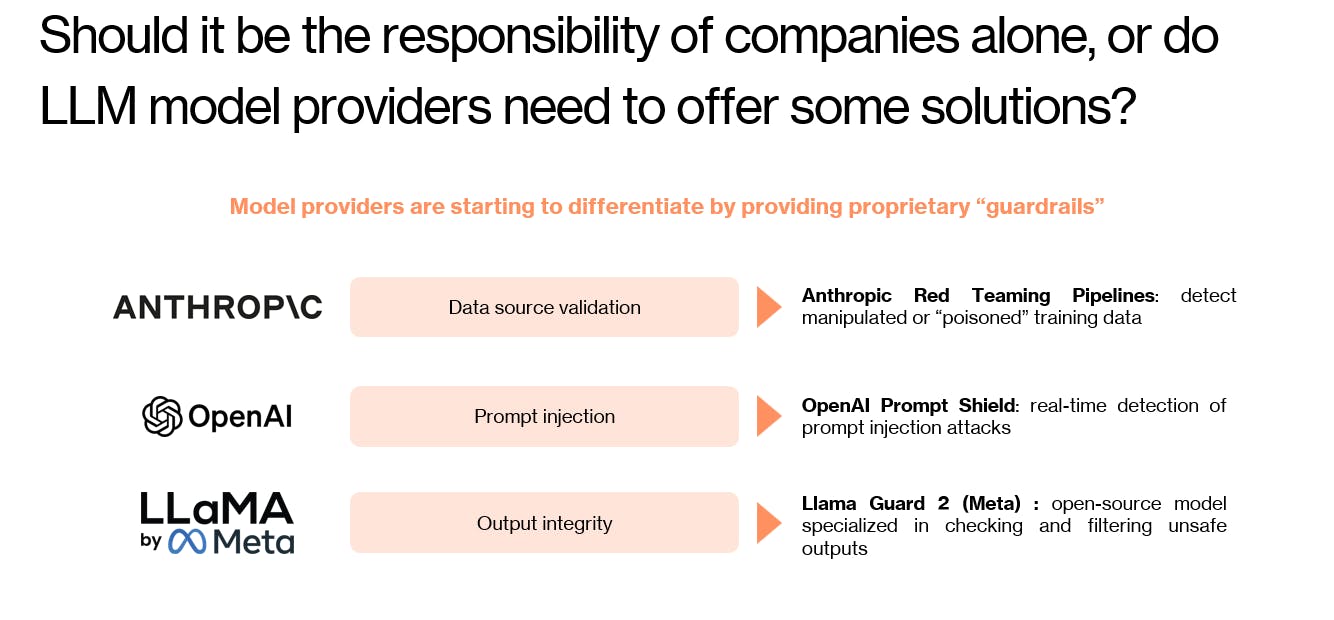

Model providers have some solutions, but they won’t solve this alone

Model vendors like Anthropic, Meta, and OpenAI are responding. Guardrails, red-teaming pipelines, prompt shields, and output filters are improving rapidly. These efforts matter, but they are insufficient.

Model-level safety only governs what the model generates, not what happens around it. Enterprise AI systems live inside complex application stacks with custom data flows, permissions, APIs, and business logic. Attacks are context-specific, and malicious prompts often look indistinguishable from benign ones, especially when embedded in code, documentation, or third-party content.

Expecting frontier models to fully understand and enforce each organization’s security policies is unrealistic. AI security cannot be outsourced to the model layer.

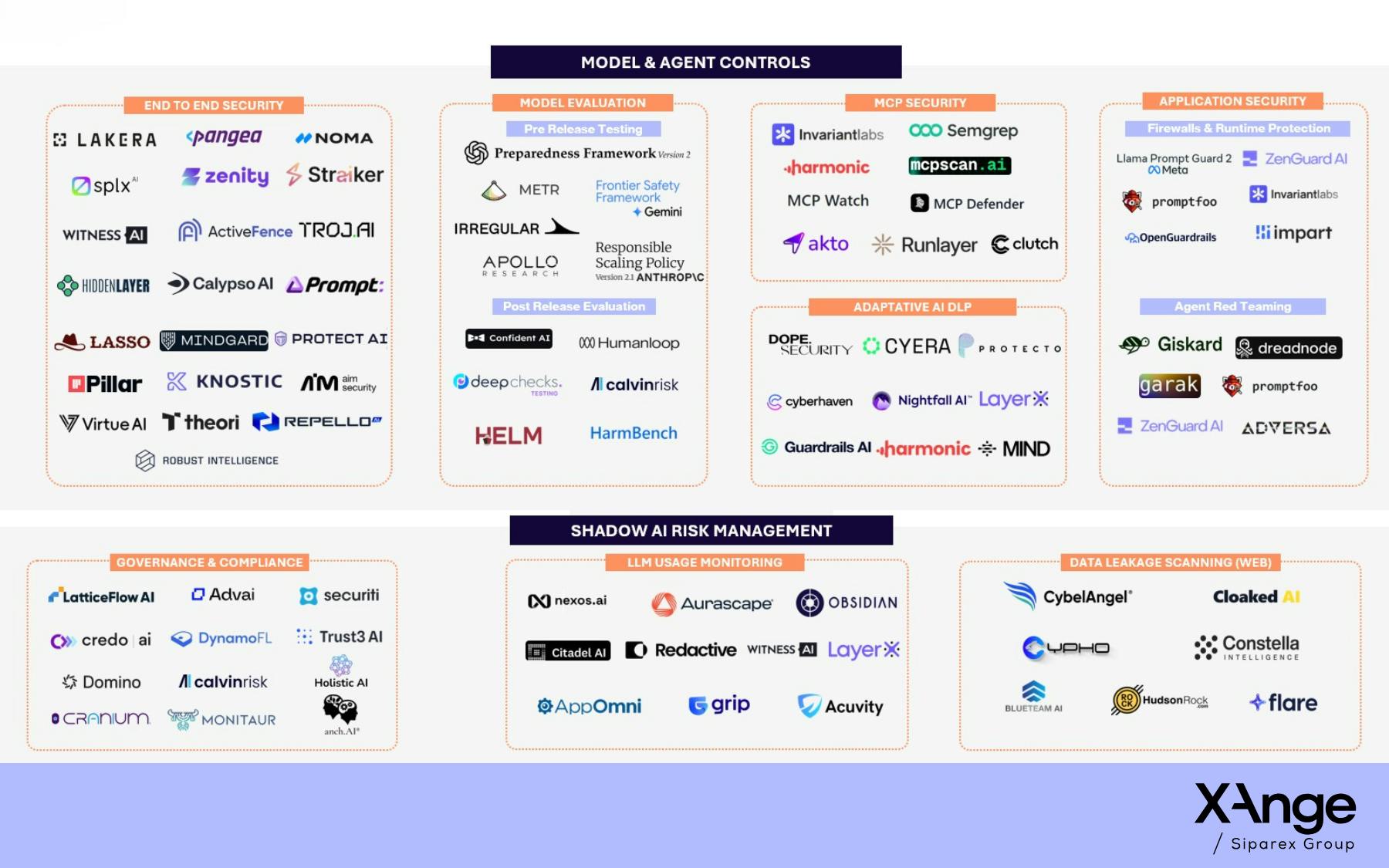

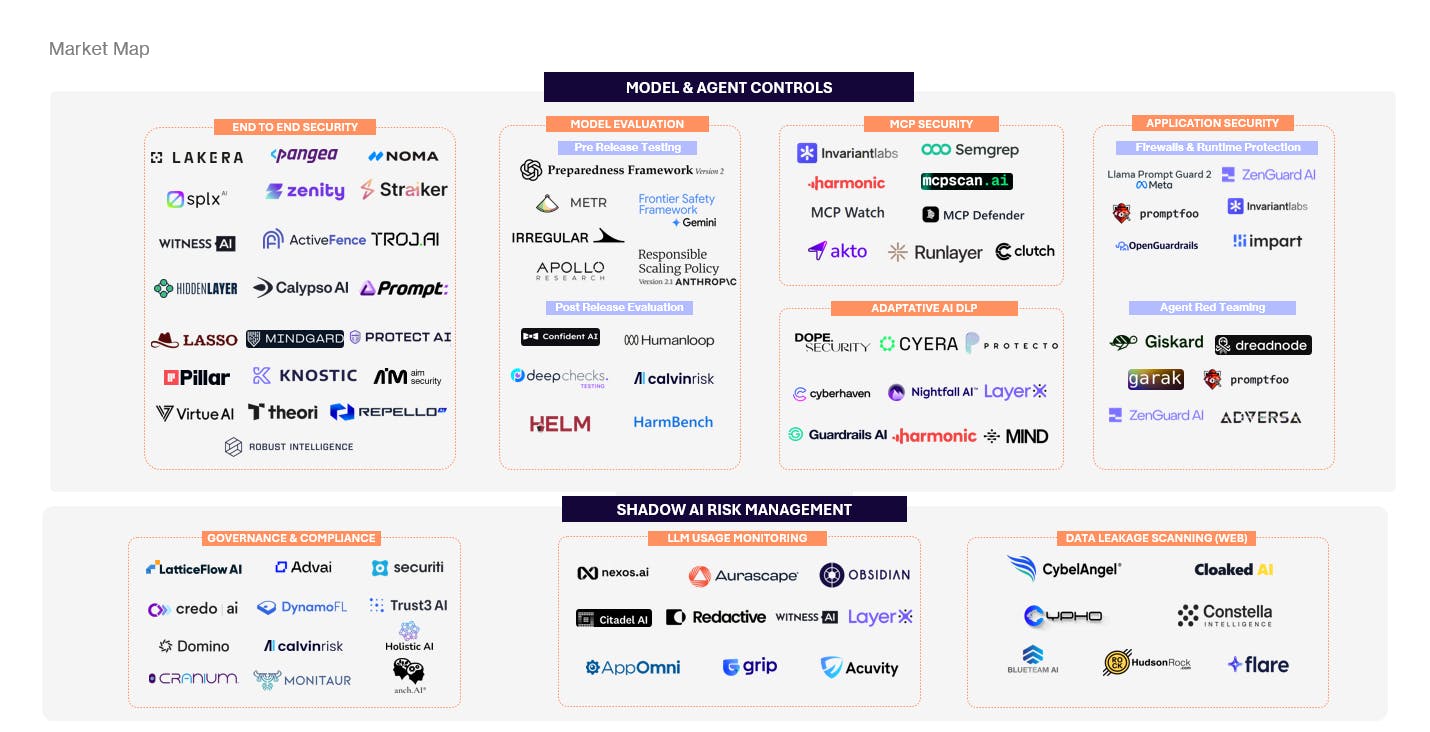

Enter: the emergence of an AI security stack

This gap is driving a structural shift in the security market.

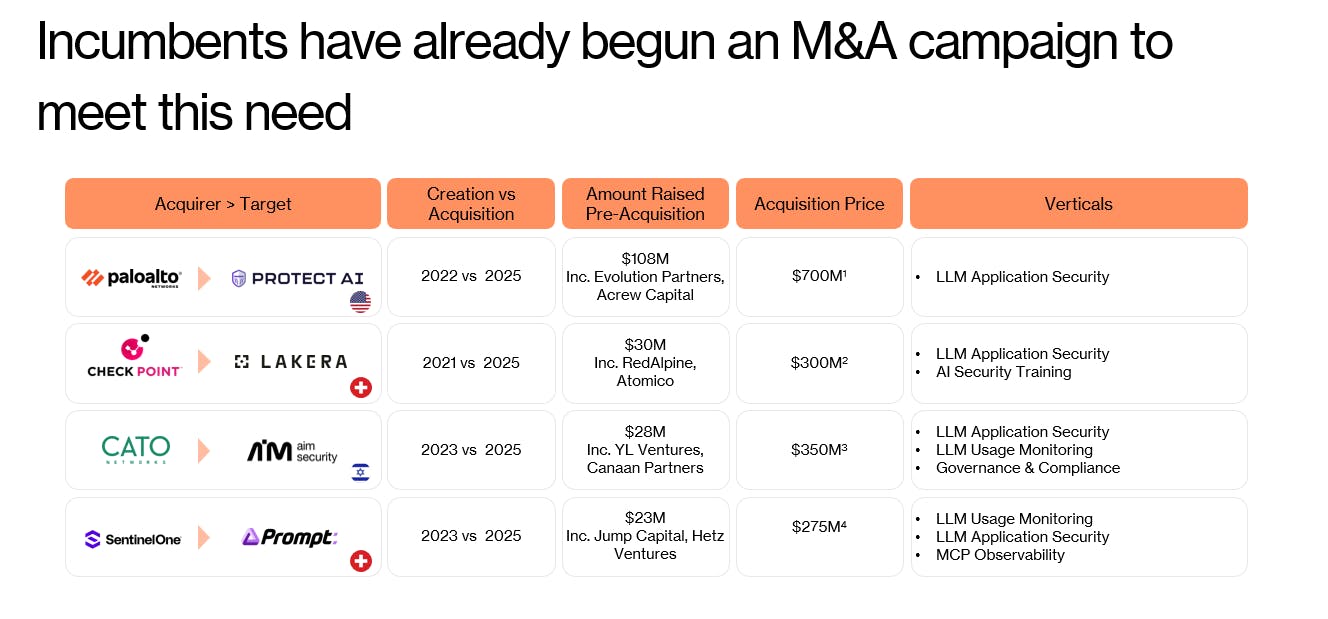

Incumbents have recognized this shift and are acquiring aggressively (see Palo Alto Networks’ $700M acquisition of Protect AI this year), signaling that AI application security and agent control are becoming core security primitives rather than niche add-ons.

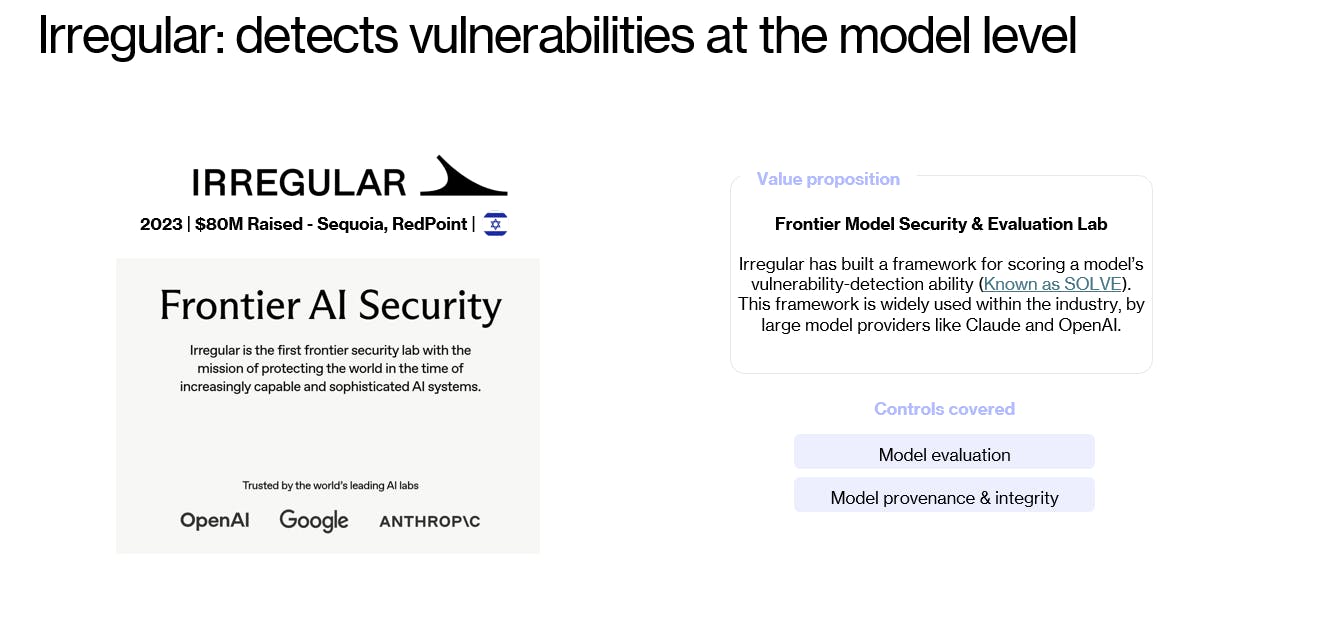

The first wave of AI security focused on model evaluation, provenance, and red teaming. These tools, such as Irregular, answer an important first question: “Is this model safe in principle?”

A second wave is emerging, oriented around enterprise and application controls. These solutions secure AI where it actually operates: at runtime, inside workflows, agents, and data paths. They govern which tools agents can access, sandbox execution, enforce policies in real time, monitor usage, and detect data leakage across both approved and shadow AI.

· Khosla-backed Runlayer has built a security platform designed for Model Context Protocol (MCP) infrastructure, controlling and verifying agent tool calls, sandboxing executions, and providing deep visibility into agent activity to prevent unauthorized or dangerous actions at runtime

· Straiker’s end-to-end agent protection platform enforces real-time policies on agent actions, tool use, and browser behavior, with a focus on preventing data leakage while maintaining full auditability across workflows

· Witness takes an enterprise AI governance and control approach, giving security teams visibility into AI usage across the organization, enforcing centralized policies for public and private LLMs, and managing compliance and employee use to reduce shadow AI risk
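The runtime controls these second-wave tools describe (tool allowlisting, argument checks before execution, and an audit trail) can be illustrated with a minimal sketch. This is not how Runlayer, Straiker, or Witness implement their products. `ToolPolicy`, `evaluate_tool_call`, `guarded_call`, `only_workspace_paths`, and the tool names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolPolicy:
    """Hypothetical per-agent policy: which tools may run, plus argument checks."""
    allowed_tools: set[str]
    # Optional per-tool validators; each returns True if the arguments are acceptable.
    validators: dict[str, Callable[[dict[str, Any]], bool]] = field(default_factory=dict)


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str


def evaluate_tool_call(policy: ToolPolicy, tool: str, args: dict[str, Any]) -> Decision:
    """Check a proposed tool call against the policy before it executes."""
    if tool not in policy.allowed_tools:
        return Decision(False, f"tool '{tool}' is not on the allowlist")
    validator = policy.validators.get(tool)
    if validator is not None and not validator(args):
        return Decision(False, f"arguments rejected for tool '{tool}'")
    return Decision(True, "allowed")


# Every attempted call is recorded here, whether it was allowed or blocked.
audit_log: list[tuple[str, dict[str, Any], Decision]] = []


def guarded_call(policy: ToolPolicy, tool: str, args: dict[str, Any],
                 execute: Callable[[str, dict[str, Any]], Any]) -> Any:
    """Enforce the policy, log the attempt, and only then run the tool."""
    decision = evaluate_tool_call(policy, tool, args)
    audit_log.append((tool, args, decision))
    if not decision.allowed:
        raise PermissionError(decision.reason)
    return execute(tool, args)


def only_workspace_paths(args: dict[str, Any]) -> bool:
    """Naive path check for illustration; a real system would canonicalize paths."""
    path = str(args.get("path", ""))
    return path.startswith("/workspace/") and ".." not in path


if __name__ == "__main__":
    policy = ToolPolicy(
        allowed_tools={"read_file", "search_docs"},
        validators={"read_file": only_workspace_paths},
    )

    def fake_execute(tool: str, args: dict[str, Any]) -> str:
        return f"executed {tool}"

    print(guarded_call(policy, "read_file", {"path": "/workspace/notes.txt"}, fake_execute))
    # executed read_file
    try:
        guarded_call(policy, "http_post", {"url": "https://example.com"}, fake_execute)
    except PermissionError as err:
        print("blocked:", err)
    # blocked: tool 'http_post' is not on the allowlist
```

The design choice worth noting is that enforcement sits outside the model: even if a prompt injection convinces the agent to request `http_post`, the call is refused and logged before anything executes.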

What CISOs and builders should internalize

For security leaders, the takeaway is clear. AI expands the attack surface beyond infrastructure and code into language, autonomy, and decision-making. Controlling risk requires visibility into AI usage, constraints on agent behavior, and enforcement at the application layer, not just at the model boundary.

For builders, this is a generational opportunity. Enterprises are actively looking for ways to adopt AI without sacrificing control, compliance, or trust. The winners will not be those who promise perfectly aligned models, but those who give organizations the tools to safely operate imperfect ones.

Security in the age of AI is no longer about preventing systems from being breached. It is about preventing systems from being convinced.

Are you a European founder building in the AI security space? We’d love to hear from you!

This article was written with the contribution of Timothée Bourgeois.